The software development landscape is experiencing its most profound transformation since the advent of the internet. Artificial intelligence, particularly generative AI and agentic systems, is fundamentally altering how code is written, reviewed, and deployed. However, recent research reveals that simply adopting AI tools isn’t enough: organizations must completely reimagine their development processes, team structures, and success metrics to capture AI’s full potential.

The Current State: Promising but Uneven Results

A comprehensive survey of nearly 300 publicly traded companies shows that while many organizations are seeing positive impacts from AI tools, a small subset is achieving particularly large gains. Top performers reported improvements of 16 to 30 percent in team productivity, customer experience, and time to market, with software quality improvements ranging from 31 to 45 percent.

Organizations tracking over 600 companies found that more than 60% experienced at least a 25% productivity improvement from AI, though truly transformative gains occurred when 80 to 100 percent of developers adopted these tools, resulting in improvements exceeding 110 percent.

These disparities highlight a critical insight: AI adoption alone doesn’t guarantee success. The organizations achieving breakthrough results are those fundamentally rethinking how software development works.

Two Foundational Shifts Driving Success

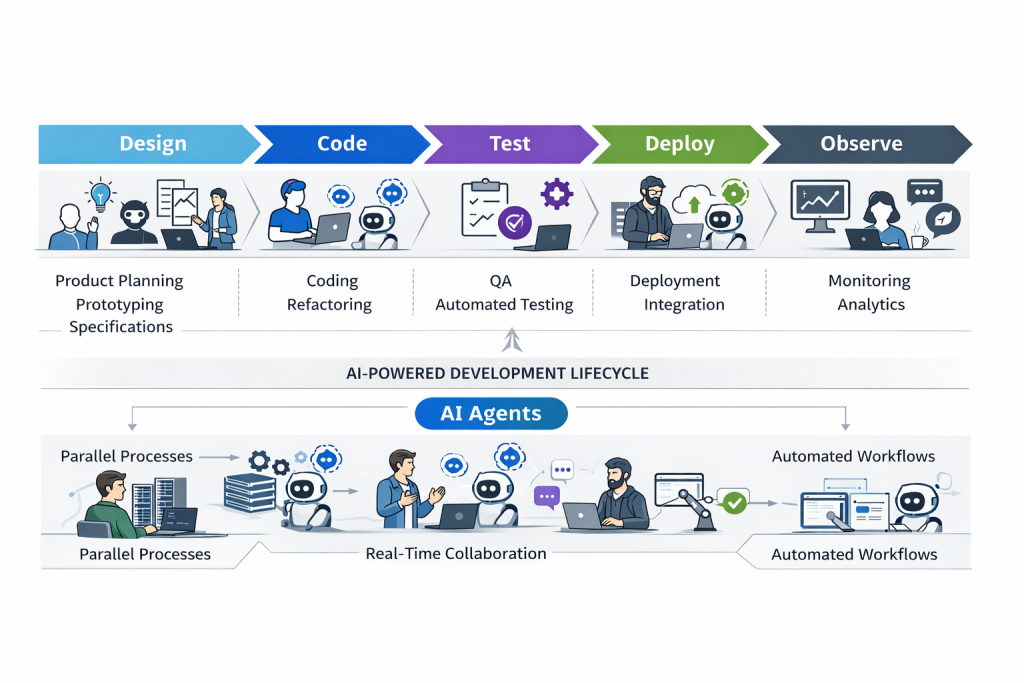

1. End-to-End Implementation Across the Development Lifecycle

High-performing organizations embed AI across the entire product development lifecycle rather than limiting it to isolated use cases. They are six to seven times more likely than peers to scale to four or more use cases, with nearly two-thirds of leaders reporting four or more use cases at scale compared to just 10% of bottom performers.

This holistic approach spans from initial design and coding through testing, deployment, and adoption tracking. Rather than treating AI as a coding assistant, leading organizations integrate it into every stage of development.

Consider the example of Cursor, an AI-native startup that exemplifies this comprehensive approach. Their team operates as an internal lab for AI-driven engineering workflows, where developers trial solutions to their own challenges and productize those that gain traction. Engineers collaborate with AI agents in real time, including through voice, to refactor code and build features, while simultaneously triggering background agents to handle other tasks.

2. Creating AI-Native Roles and Responsibilities

The evolution of AI tools is reshaping core job functions in software development. More than 90% of surveyed software teams use AI for activities such as refactoring, modernization, and testing, saving an average of six hours per week. However, as engineering headcount growth slows, developers are expected to combine technical fluency with product, design, and business understanding.

This shift reflects a fundamental change in what developers do. When someone writes and refines a prompt, they are effectively writing a specification: defining behavior, constraints, and outcomes in a way that resembles the work of an architect or product manager. As a result, developer roles are beginning to overlap with those of product managers and architects.

Product managers now spend less time on feature delivery and more on design, prototyping, quality assurance, and responsible AI practices. Software engineers focus more on full-stack fluency, structured communication of specifications, and understanding architectural trade-offs.

Looking ahead, the nature of software work may transform dramatically. Many teams may operate as orchestrators of parallel and asynchronous AI agents, assigning workflows and shaping end-to-end logic while continuously verifying outputs.

Three Critical Enablers of AI Success

Beyond these foundational shifts, top-performing organizations reinforce their AI strategies with three essential practices:

1. Intensive, Personalized Training

While most companies offer on-demand courses, those investing in hands-on workshops and one-on-one coaching are much more likely to see measurable gains (57% of top performers versus only 20% of bottom performers).

Effective training goes beyond basic tool usage. High-performing organizations design training that mirrors real development work, integrating AI into code reviews, sprint planning, and testing cycles so teams learn to apply AI in live contexts rather than simulations.

The rapid advancement of AI tools makes continuous learning essential. Static documentation or annual training sessions quickly become outdated. Leading firms have established internal “AI guilds” or “centers of enablement” that curate new use cases, share best practices, and provide on-demand mentoring.

2. Focused Measurement

High-performing organizations don’t focus solely on adoption metrics like tool usage frequency or code acceptance rates. Instead, they track outcomes, monitoring quality improvements (79%) and speed gains (57%).

As one industry leader notes, companies too often measure AI’s impact by counting how much code it produces rather than what that code achieves. Lines of code or AI contribution percentages don’t reveal whether output is secure, maintainable, or useful.

Current metrics can be misleading: knowing that 30% of code is written by AI doesn’t indicate whether that code is good or bad. Productivity encompasses maintainability, quality, and reduced rework, so measuring it requires better combinations of input and output metrics.

Effective measurement operates on three layers: adoption (tracking usage quantitatively and qualitatively), throughput and process efficiency (metrics like pull-request rate and cycle time), and outcomes (business objectives such as roadmap progress, defect rates, and customer impact).
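The three layers can be kept side by side in a single report so that adoption numbers are never read in isolation. The sketch below is one possible shape, not a standard: the `TeamPeriod` fields, the `three_layer_report` function, and all sample values are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from statistics import mean


def ratio(numerator: float, denominator: float) -> float:
    """Return numerator / denominator, or 0.0 when the denominator is zero."""
    return numerator / denominator if denominator else 0.0


@dataclass
class TeamPeriod:
    """One team's numbers for one reporting period (all fields hypothetical)."""
    developers: int
    developers_using_ai: int          # adoption layer
    merged_pull_requests: int         # throughput layer
    cycle_times_hours: list[float]    # throughput layer: open-to-merge time per PR
    roadmap_items_planned: int        # outcome layer
    roadmap_items_delivered: int      # outcome layer
    defects_found: int                # outcome layer


def three_layer_report(p: TeamPeriod) -> dict[str, dict[str, float]]:
    """Group metrics by layer: adoption, throughput, outcomes."""
    return {
        "adoption": {
            "adoption_rate": ratio(p.developers_using_ai, p.developers),
        },
        "throughput": {
            "prs_per_developer": ratio(p.merged_pull_requests, p.developers),
            "mean_cycle_time_hours": mean(p.cycle_times_hours) if p.cycle_times_hours else 0.0,
        },
        "outcomes": {
            "roadmap_completion": ratio(p.roadmap_items_delivered, p.roadmap_items_planned),
            "defects_per_pr": ratio(p.defects_found, p.merged_pull_requests),
        },
    }


# Illustrative values only; they do not come from the survey.
example = TeamPeriod(
    developers=10, developers_using_ai=8,
    merged_pull_requests=40, cycle_times_hours=[12.0, 20.0, 16.0],
    roadmap_items_planned=5, roadmap_items_delivered=4,
    defects_found=6,
)
report = three_layer_report(example)
```

Grouping by layer makes it harder to report a high adoption rate without also showing whether cycle time and defect rates moved.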

3. Aligned Incentives and Change Management

Top performers embed AI adoption directly into performance evaluations. Nearly eight in ten link generative AI-related goals to both product manager and developer reviews, compared with just 10% of bottom performers for developers and none for product managers.

Leading organizations focus incentives on behaviors that drive impact rather than just usage. Goals are framed around contributions such as identifying automation opportunities, improving velocity through AI-enabled testing, or enhancing quality via model-assisted code review.

Emerging Challenges and Considerations: The Code Trust Bottleneck

Code generation is no longer the bottleneck; the challenge now centers on clarity and trust. AI is literal rather than creative, making architecture and design more critical. Organizations face a “code trust” bottleneck where humans need to review and verify all AI-produced code.

Benchmarking reveals that each coding model has a distinct “personality”: some are verbose, some introduce security or maintainability issues, and some are elegant but brittle. Newer models solve problems better overall but use approximately three times as many lines of code as earlier versions, increasing complexity and introducing more potential bugs.

In the past, code review was something only top engineering companies practiced. Now, every large organization needs to make it part of their core discipline, identifying issues earlier in the development cycle, ideally inside the integrated development environment itself.

The Complexity of Enterprise Integration

Much of the hype focuses on “greenfield” examples: startups building from scratch. In reality, most companies have millions of lines of existing code plus high internal and external regulatory standards. That complexity slows adoption, but the delay reflects the time integration takes rather than a failure of the technology.

The concept of “vibe and verify” captures this challenge: organizations can generate code quickly but must verify it before deployment. Verification ensures code fits the environment, meets compliance requirements, and can be trusted.

Accountability and Trustworthiness

Accountability begins with defining what “trustworthy” means for an organization. A large manufacturer and a startup will have very different thresholds. Most enterprises care about explainability, transparency, and repeatability.

Using independent models for verification (for example, one model checking another) is emerging as a best practice. Organizations are uncomfortable with the same AI model evaluating its own output.
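As a rough illustration of one model checking another, the sketch below treats a “model” as any function from a prompt to a text response and refuses to let the generator review itself. The names `generate_and_verify`, `stub_generator`, and `stub_verifier` are hypothetical, the APPROVE/REJECT convention is an assumption, and the stubs stand in for real model calls.

```python
from typing import Callable

# Assumption: a "model" is any callable that maps a prompt to a text response.
Model = Callable[[str], str]


def generate_and_verify(task: str, generator: Model, verifier: Model) -> tuple[str, bool]:
    """Generate code with one model and have a different model review it."""
    if generator is verifier:
        # Enforce independence: a model must not evaluate its own output.
        raise ValueError("verifier must be independent of the generator")
    code = generator(task)
    review_prompt = (
        f"Review this code for the task {task!r}. "
        f"Reply APPROVE or REJECT.\n\n{code}"
    )
    verdict = verifier(review_prompt)
    approved = verdict.strip().upper().startswith("APPROVE")
    return code, approved


# Stub models for illustration; real ones would call two different AI systems.
def stub_generator(prompt: str) -> str:
    return "def add(a, b):\n    return a + b\n"


def stub_verifier(prompt: str) -> str:
    return "APPROVE" if "return a + b" in prompt else "REJECT"


code, approved = generate_and_verify("add two numbers", stub_generator, stub_verifier)
```

In practice the verifier's verdict would be one input among several, alongside tests, static analysis, and human review.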

Traditional risks around intellectual property, security, and compliance still matter, but AI accelerates them. Code moves faster, so controls must be embedded earlier in the pipeline rather than relying on human sanity checks later.

The Future of Software Development: Systems-Level Thinking

Software productivity measurement requires “systems thinking.” The product development lifecycle is a flow: if one part accelerates, such as coding, but another lags, such as review or compliance, true improvement hasn’t occurred.

Even if coding becomes faster, this can shift bottlenecks elsewhere, such as requirements definition, testing, or governance. Without holistic change, gains at one point create friction downstream.
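A small calculation makes the flow argument concrete. In this sketch, end-to-end throughput is assumed to equal the capacity of the slowest stage; the stage names and weekly capacities are made-up numbers, and `pipeline_throughput` is a hypothetical helper.

```python
def pipeline_throughput(stage_capacity: dict[str, float]) -> tuple[str, float]:
    """Return (bottleneck stage, items per week the whole flow can deliver).

    Simplifying assumption: work passes through every stage in sequence,
    so the slowest stage caps the whole pipeline.
    """
    bottleneck = min(stage_capacity, key=stage_capacity.get)
    return bottleneck, stage_capacity[bottleneck]


# Hypothetical capacities in work items per week.
before = {"requirements": 12, "coding": 10, "review": 11, "testing": 14}
after = {**before, "coding": 20}  # assume AI doubles coding capacity only

print(pipeline_throughput(before))  # ('coding', 10)
print(pipeline_throughput(after))   # ('review', 11)
```

Under these assumed numbers, doubling coding capacity raises delivered throughput from 10 to 11 items per week, because review becomes the new constraint.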

The Next Wave of Innovation

While most progress has occurred in IDE-based tools for individual developers writing code, the next wave will focus on code review and agentic systems that coordinate work across stages of the development lifecycle. Testing represents a particularly interesting frontier.

Organizations cannot fully automate quality without defining “truth,” and many legacy systems lack that specification. This represents a blocker for full-scale AI transformation, requiring new ways to formalize intent and redefine how “correctness” is expressed.

The Shift in Human Value

For decades, the assumption was that coding was the hard part. It turns out describing what to build is harder. As generative tools make writing code easy, defining the right intent, the specification, will become the core human task. This shift changes who adds value in the development process, rewarding clarity, systems thinking, and the ability to guide AI toward the right outcomes.

Timeline for Transformation

Industry leaders believe the software development lifecycle will be completely redefined within three years. This transformation represents one of the biggest shifts since the invention of the internet, redefining not just technical processes but human roles, skills, and collaboration models.

In just the past year, AI coding benchmarks have nearly doubled, from 30 points to 55 points, though they still trail the overall intelligence index of general models by 20 points, demonstrating that AI coding tools still have room to grow smarter. Tools are becoming more powerful as they expand across the development lifecycle with stronger orchestration and tighter system integration.

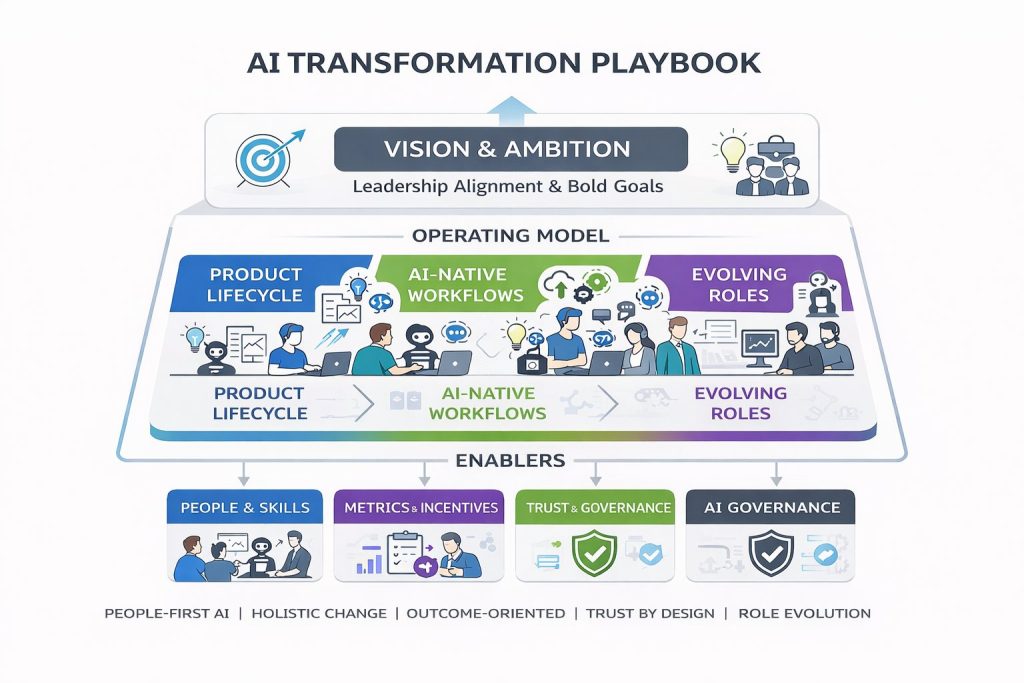

Strategic Recommendations for Organizations

Software organizations leading in leveraging AI for real impact follow three overarching steps: They set ambitious goals that unite leadership and energize the organization; they develop a holistic blueprint for the future operating model, tested and refined to fit their context; and they create a detailed roadmap that redefines team structures, workflows, metrics, and incentives to unlock productivity at scale.

Success requires more than implementing new tools. Organizations must:

- Embrace comprehensive change: Transform the entire product development lifecycle rather than treating AI as a point solution

- Invest in people: Provide intensive, continuous training that builds both technical skills and new ways of thinking about software development

- Measure what matters: Track business outcomes rather than superficial metrics like code volume

- Align incentives: Embed AI-enabled behaviors into performance systems and organizational culture

- Build trust frameworks: Establish clear standards for code quality, security, and compliance in an AI-accelerated environment

- Prepare for role evolution: Recognize that job functions will change as AI handles more routine tasks and humans focus on specification, orchestration, and validation

Conclusion

The integration of AI into software development represents a watershed moment for the industry. While the technology continues to advance rapidly, organizational adaptation remains the critical factor determining success. Companies that treat AI as merely another tool will see modest improvements. Those that fundamentally rethink their operating models—reimagining roles, processes, measurement, and team structures—will unlock transformative gains.

The next three years will likely see the software development lifecycle completely redefined. Organizations that begin this transformation now, learning through iteration and building the foundational capabilities for an AI-native future, will be positioned to thrive in this new landscape. The question is no longer whether AI will reshape software development, but rather which organizations will successfully navigate this transition and emerge as leaders in the AI-powered era of software creation.

This analysis draws on comprehensive research including surveys of nearly 300 publicly traded companies and interviews with industry leaders at the forefront of AI-driven software development, providing insights into both current best practices and emerging trends shaping the future of the field.