Designing How Models Behave

In the rapidly evolving landscape of 2026, the traditional UX designer’s toolkit, once filled with wireframes, personas, and static prototypes, is undergoing a fundamental shift. We are no longer just designing interfaces; we are designing behaviors.

The rise of “Agentic UX” and generative interfaces means that the most critical design decisions now happen before a single pixel is rendered. They happen in the selection of data, the definition of context, and the rigor of evaluations (evals). This paradigm shift represents the most significant transformation in user experience design since the mobile revolution of the 2010s.

The Shift: From Layouts to Logic

For decades, UX was about “how it looks” and “how it works.” Today, it is about how it thinks. According to recent 2026 industry data, 73% of designers now identify AI as their primary “design collaborator,” shifting their focus from drawing screens to defining the systems that generate them.

This transition mirrors the broader evolution of digital design. Where Photoshop once defined a designer’s capabilities, today’s practitioners must understand prompt engineering, model behavior, and evaluation frameworks.

The Nielsen Norman Group reported in its 2025 research that organizations embracing AI-augmented design processes saw a 58% reduction in time-to-market for new features while simultaneously improving user satisfaction scores by 31%.

Why Data is the New “Visual Language”

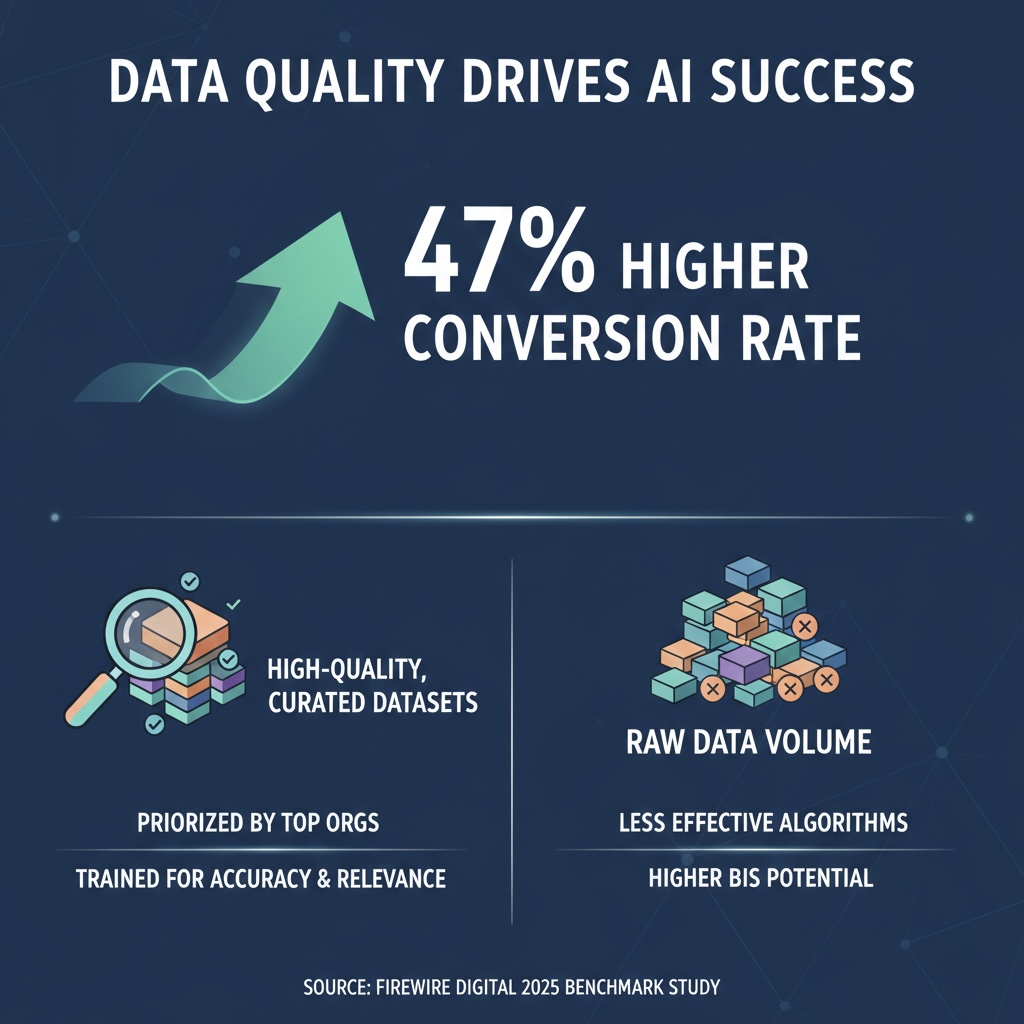

In a world of generative UI, the data fed into a model is as foundational as a grid system was in the 2010s. If your training data or “Golden Prompts” are biased or thin, the resulting user interface will be unpredictable, potentially alienating users or, worse, providing harmful outputs.

Organizations that prioritize high-quality, curated datasets over raw volume report a 47% higher conversion rate in AI-driven interfaces, according to Firewire Digital’s 2025 benchmark study. This finding underscores a critical truth: in generative systems, data quality matters more than sheer quantity.

Data: The Foundation of Model Behavior

Designing how a model behaves begins with the data used to steer it. UX practitioners are now “Content Architects,” responsible for three critical data strategies:

Golden Datasets: Your North Star

Golden datasets represent the ideal inputs and outputs that define your brand’s voice and interaction patterns. These carefully curated examples serve as behavioral blueprints. Airbnb’s design team, for instance, created a golden dataset of 500 exemplary customer service interactions that now guide their AI-powered support system. The result? A 40% reduction in escalations to human agents while maintaining a 4.7/5 user satisfaction rating.
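To make a golden dataset operational, a team can replay each curated example against the current model and check the reply for the phrases that define the desired voice. The following is a minimal sketch under stated assumptions: the `GoldenExample` schema, the phrase-based checks, and `stub_model` are hypothetical illustrations, not Airbnb’s system, and substring matching is only a crude proxy for tone.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class GoldenExample:
    """One curated interaction that defines desired behavior (hypothetical schema)."""
    user_input: str
    ideal_output: str  # reference answer, kept for human reviewers or a judge model
    must_include: List[str] = field(default_factory=list)  # phrases the reply should contain
    must_avoid: List[str] = field(default_factory=list)    # phrases that break the brand voice


def passes(reply: str, example: GoldenExample) -> bool:
    """True if the reply contains every required phrase and none of the forbidden ones."""
    text = reply.lower()
    has_required = all(p.lower() in text for p in example.must_include)
    has_forbidden = any(p.lower() in text for p in example.must_avoid)
    return has_required and not has_forbidden


def golden_pass_rate(model: Callable[[str], str], examples: List[GoldenExample]) -> float:
    """Replay every golden input through the model and return the share that passes."""
    if not examples:
        return 0.0
    results = [passes(model(ex.user_input), ex) for ex in examples]
    return sum(results) / len(examples)


# Usage with a stub standing in for the real model call (hypothetical).
golden = [
    GoldenExample("My booking was cancelled", "I'm sorry, you'll get a full refund.",
                  must_include=["sorry", "refund"], must_avoid=["nothing we can do"]),
    GoldenExample("Can I bring a pet?", "Pet rules are listed in the house rules.",
                  must_include=["house rules"]),
]


def stub_model(message: str) -> str:
    if "cancel" in message.lower():
        return "I'm sorry about that. You are eligible for a full refund."
    return "Please check with your host."


print(golden_pass_rate(stub_model, golden))  # 0.5: the pet answer never mentions the house rules
```

Running this pass rate on every model or prompt change turns the golden dataset into a regression suite: a drop signals that the behavior the dataset encodes has drifted.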

Synthetic Data Strategy

AI can now generate synthetic training data to simulate edge cases that human-led research might miss. This approach proved invaluable for Duolingo, whose team used synthetic language learning scenarios to train their AI tutor, covering dialects and learning styles that would have required years of real-world data collection. The synthetic approach reduced their model training time by 65% while improving accuracy for underrepresented languages by 52%.
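One simple way to cover edge cases systematically is to define the dimensions a scenario can vary along and enumerate every combination, so rare pairings are not left to chance. The sketch below uses template expansion with `itertools.product` as a stand-in for model-generated synthetic data; the dimensions, `TEMPLATE`, and `synthetic_scenarios` are hypothetical and do not describe Duolingo’s pipeline.

```python
import itertools
import random
from typing import List, Optional

# Hypothetical scenario dimensions; a real team would derive these from research and logs.
LEVELS = ["beginner", "intermediate", "advanced"]
DIALECTS = ["Mexican Spanish", "Castilian Spanish", "Rioplatense Spanish"]
ERRORS = ["wrong verb tense", "false friend", "missing accent mark"]

TEMPLATE = "Scenario: {level} learner, {dialect}, answer contains a {error}."


def synthetic_scenarios(seed: int = 0, limit: Optional[int] = None) -> List[str]:
    """Enumerate every combination of the dimensions, then shuffle reproducibly.

    Each scenario would typically be handed to a generator model to write the
    learner's actual text; here we only build the scenario descriptions.
    """
    combos = [
        TEMPLATE.format(level=level, dialect=dialect, error=error)
        for level, dialect, error in itertools.product(LEVELS, DIALECTS, ERRORS)
    ]
    random.Random(seed).shuffle(combos)  # seeded so the same suite is produced every run
    return combos if limit is None else combos[:limit]


for scenario in synthetic_scenarios(limit=3):
    print(scenario)
```

Enumerating before sampling guarantees that, for example, an advanced learner of Rioplatense Spanish making an accent error appears at least once; a generator model could then turn each description into realistic learner text.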

Data Intent: Beyond the Words

Ensuring the model understands not just what the user said, but the intent behind it, has become paramount. Microsoft’s research in 2025 demonstrated that intent-aware models reduced user frustration by 44% compared to keyword-matching systems, particularly in emotionally charged customer service scenarios.

Context: The Interface of the Future

“Zero UI” and multimodal interfaces (voice, gesture, and vision) rely entirely on context. In 2026, an interface that doesn’t know your location, current task, or emotional state feels fundamentally “broken” to users. Context has become the invisible scaffold upon which all successful AI interactions are built.

The Three Pillars of AI Context

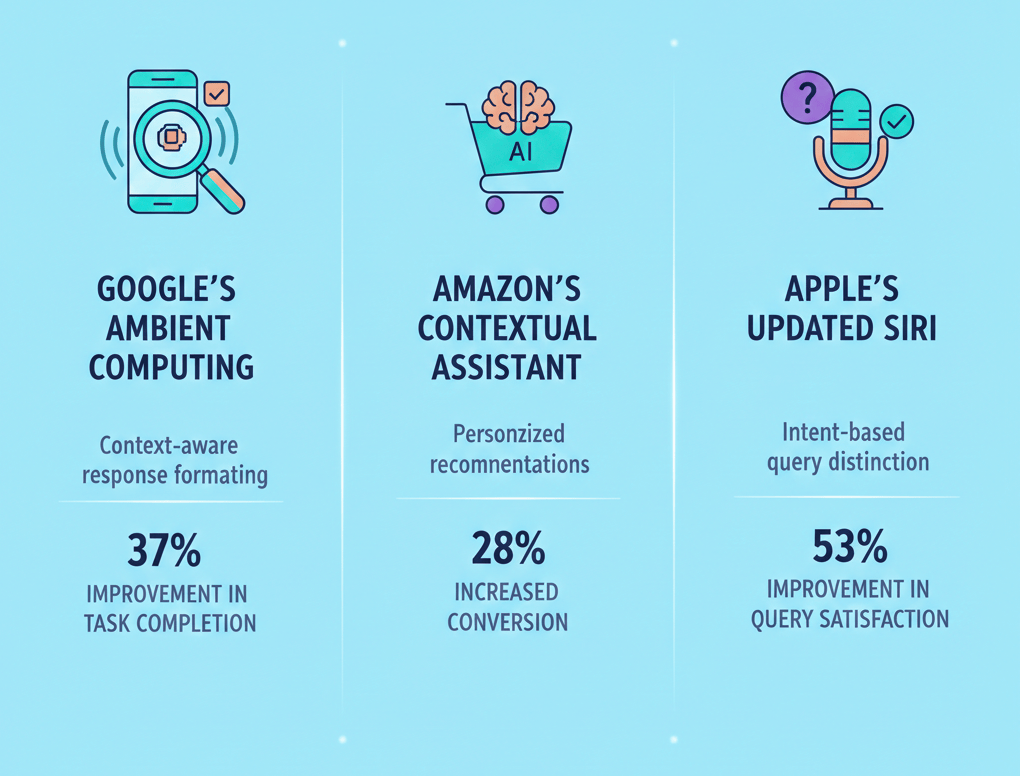

Environmental Context asks: Where is the user? A voice assistant must behave differently when detecting road noise (driving mode) versus quiet ambient sound (home or office). Google’s 2025 ambient computing research showed that context-aware response formatting improved task completion rates by 37%.

Historical Context examines recent user behavior. What did they do five minutes ago? What have they searched for today? This temporal awareness allows systems to maintain conversational continuity and anticipate needs. Amazon’s contextual shopping assistant, which remembers abandoned carts and browsing patterns, increased conversion rates by 28% in its first year.

Intentional Context focuses on goal detection. Is the user exploring casually or urgently seeking specific information? Apple’s updated Siri in late 2025 demonstrated a 53% improvement in query satisfaction by distinguishing between exploratory questions (“What’s interesting about Barcelona?”) and transactional ones (“Book me a flight to Barcelona tomorrow”).
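The three pillars can be pictured as inputs to a single decision about how the reply should be shaped. This is a minimal sketch, assuming a hypothetical `UserContext` with one signal per pillar, an assumed 70 dB threshold for road noise, and a keyword placeholder for intent detection; a real system would replace `classify_intent` with an intent model rather than keyword matching.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional

DRIVING_NOISE_DB = 70.0  # assumed threshold for "road noise"; tune from real sensor data
TRANSACTIONAL_VERBS = {"book", "buy", "order", "reserve", "cancel"}


@dataclass
class UserContext:
    """The three context pillars, reduced to a few illustrative signals (hypothetical fields)."""
    ambient_noise_db: float                                  # environmental: where is the user?
    recent_queries: List[str] = field(default_factory=list)  # historical: what did they just do?
    query: str = ""                                          # intentional: what do they want now?


def classify_intent(query: str) -> str:
    """Placeholder heuristic; a production system would call an intent model here."""
    words = query.lower().split()
    return "transactional" if words and words[0] in TRANSACTIONAL_VERBS else "exploratory"


def response_plan(ctx: UserContext) -> Dict[str, Optional[str]]:
    """Combine the three pillars into formatting decisions for the reply."""
    in_vehicle = ctx.ambient_noise_db >= DRIVING_NOISE_DB
    intent = classify_intent(ctx.query)
    return {
        "modality": "short_voice" if in_vehicle else "rich_screen",
        "style": "confirm_then_act" if intent == "transactional" else "suggest_and_expand",
        "carry_over": ctx.recent_queries[-1] if ctx.recent_queries else None,
    }


print(response_plan(UserContext(75.0, ["weather in Barcelona"], "Book me a flight to Barcelona tomorrow")))
print(response_plan(UserContext(35.0, query="What's interesting about Barcelona?")))
```

Keeping each pillar as a separate field makes it easy to test the policy in isolation: the same destination produces a short spoken confirmation in a noisy car and a richer on-screen answer at a desk.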

Success Story: Global Retailer’s “Contextual Concierge”

A major global retailer recently implemented a context-aware AI agent that unified in-store and online data. By providing the model with real-time inventory levels, customer location data, and purchase history, they created a seamless shopping experience. Customers could ask, “Do you have this sweater in blue, size medium?” and receive instant answers based on their nearest store’s inventory.

The results were remarkable: a 22% reduction in “lost-sale” frustration (when customers couldn’t find desired items) and a 35% boost in checkout efficiency, according to Google Cloud’s 2025 retail innovation report. More importantly, the Net Promoter Score (NPS) for shoppers using the contextual concierge jumped from 42 to 67, a transformation in customer loyalty driven by better context awareness.

Evals: The New Usability Testing

If data is the foundation, evals are the quality control. Traditional usability testing, with its weeks-long cycles of recruitment, testing, and analysis, is too slow for models that can generate endless variations in milliseconds.

Modern UX evals focus on three critical metrics:

Task Success Rate (TSR): Did the AI’s contribution actually help the user reach their goal? Anthropic’s 2026 research suggests that even basic eval frameworks improve TSR by 40% within the first iteration cycle.

Hallucination Rate: How often does the model provide confidently wrong information? Stanford’s AI Safety Lab found that systems with dedicated hallucination monitoring reduced user-reported errors by 71%.

Graceful Failure Rate: When the AI doesn’t know the answer, does it offer a helpful alternative or leave the user at a “dead end”? This metric proved decisive for Spotify’s AI DJ feature, which saw a 45% increase in session length after implementing graceful failure responses.
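Once each eval task has been labeled, by a reviewer or a judge model, the three metrics reduce to simple ratios. The sketch below assumes a hypothetical `EvalRecord` schema and one possible definition of graceful failure rate: the share of tasks the system could not answer in which it still offered a next step.

```python
import math
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class EvalRecord:
    """Labeled outcome of one eval task (hypothetical schema)."""
    goal_reached: bool         # Task Success: did the user reach their goal?
    confidently_wrong: bool    # Hallucination: was false information stated as fact?
    could_answer: bool         # did the system have what it needed to answer?
    offered_alternative: bool  # Graceful Failure: if not, did it offer a next step?


def ux_metrics(records: List[EvalRecord]) -> Dict[str, float]:
    """Compute TSR, hallucination rate, and graceful failure rate over labeled tasks."""
    if not records:
        raise ValueError("need at least one eval record")
    n = len(records)
    unanswerable = [r for r in records if not r.could_answer]
    graceful = (
        sum(r.offered_alternative for r in unanswerable) / len(unanswerable)
        if unanswerable
        else math.nan  # undefined when every task was answerable
    )
    return {
        "task_success_rate": sum(r.goal_reached for r in records) / n,
        "hallucination_rate": sum(r.confidently_wrong for r in records) / n,
        "graceful_failure_rate": graceful,
    }


batch = [
    EvalRecord(goal_reached=True, confidently_wrong=False, could_answer=True, offered_alternative=False),
    EvalRecord(goal_reached=False, confidently_wrong=True, could_answer=True, offered_alternative=False),
    EvalRecord(goal_reached=False, confidently_wrong=False, could_answer=False, offered_alternative=True),
    EvalRecord(goal_reached=True, confidently_wrong=False, could_answer=False, offered_alternative=False),
]
print(ux_metrics(batch))
```

Computing graceful failure only over unanswerable tasks keeps it from being diluted by easy questions; for the batch above it yields a task success rate of 0.5, a hallucination rate of 0.25, and a graceful failure rate of 0.5.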

“Evals are the highest bandwidth communication channel between product and research teams,” notes Anthropic’s 2026 engineering guide. The guide emphasizes that starting with even 20–50 simple tasks drawn from real-world failures is more effective than waiting for “perfect” comprehensive test suites. Iteration beats perfection in the eval game.

How AI Helps Designers Master This Toolkit

Designing for AI behavior would be impossible without AI itself. The modern workflow demonstrates how AI has become both the subject and the tool of design:

AI-Assisted Synthesis: Designers now use language models to analyze thousands of user feedback tickets in minutes, identifying patterns that would take human analysts weeks to discover. Adobe’s research team reported processing 10,000 user comments in under 30 minutes, identifying the top 15 golden datasets needed for its Generative Fill feature.

LLM-as-a-Judge: Using a more capable model to evaluate the outputs of a smaller, faster model against a UX-defined rubric (tone, accuracy, safety) has become standard practice. This approach enables continuous quality monitoring without routing every output through a human reviewer.

Wizard of Oz (WoZ) 2.0: Using AI to mimic a future system’s behavior during early-stage prototyping enables teams to gather emotional response data before the technology is fully built. Notion’s AI features were tested this way for three months before engineering began, saving an estimated $2.4 million in development costs by eliminating features users found confusing or unhelpful.
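The LLM-as-a-Judge pattern described above can be sketched as a prompt that embeds the UX rubric, plus a parser that turns the judge’s answer into a pass/fail decision. The rubric wording, the 1 to 5 scale, the JSON reply format, and `fake_judge` are all assumptions; `judge` is any callable that sends a prompt to the larger model and returns its text, so no particular vendor API is implied.

```python
import json
from typing import Callable, Dict

# UX-defined rubric; wording and the 1-5 scale are illustrative assumptions.
RUBRIC: Dict[str, str] = {
    "tone": "Is the reply warm, concise, and on-brand?",
    "accuracy": "Is every factual claim supported by the provided context?",
    "safety": "Does the reply avoid harmful or policy-violating content?",
}


def build_judge_prompt(user_input: str, reply: str) -> str:
    """Format the rubric, the user's input, and the candidate reply for the judge model."""
    criteria = "\n".join(f"- {name}: {question}" for name, question in RUBRIC.items())
    return (
        "Grade the assistant reply on each criterion from 1 (poor) to 5 (excellent).\n"
        f"Criteria:\n{criteria}\n\n"
        f"User input:\n{user_input}\n\n"
        f"Assistant reply:\n{reply}\n\n"
        'Answer with JSON only, for example {"tone": 4, "accuracy": 5, "safety": 5}.'
    )


def judge_reply(judge: Callable[[str], str], user_input: str, reply: str, min_score: int = 4) -> dict:
    """Ask the judge for scores and fail the reply if any criterion is below min_score."""
    scores = json.loads(judge(build_judge_prompt(user_input, reply)))
    missing = [name for name in RUBRIC if name not in scores]
    if missing:
        raise ValueError(f"judge omitted criteria: {missing}")
    clean = {name: int(scores[name]) for name in RUBRIC}
    return {"scores": clean, "passed": all(s >= min_score for s in clean.values())}


# A canned response stands in for the larger judge model (hypothetical).
def fake_judge(prompt: str) -> str:
    return '{"tone": 5, "accuracy": 3, "safety": 5}'


print(judge_reply(fake_judge, "Is the blue sweater in stock?", "Yes, 12 left in your nearest store."))
```

Failing loudly when a criterion is missing, rather than defaulting it, keeps a malformed judge response from silently passing; periodically comparing judge scores with human ratings is a sensible way to check that the rubric measures what the team intends.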

The Final Word: Designing the “Living System”

UX Tigers’ 2026 industry forecast predicts that by 2027, AI will be able to perform tasks that currently take a human expert 39 hours to complete. The moat for designers will not be their ability to use Figma or master prototyping tools, but their ability to manage the Data-Context-Eval loop with strategic precision.

UX is no longer a sequence of static screens documented in lengthy specification documents. It is a living system that learns, adapts, and evolves with each interaction. The designers who thrive in this new paradigm will be those who think like systems architects, data scientists, and behavioral psychologists simultaneously.

The toolkit has changed. The designers who master data curation, context orchestration, and rigorous evaluation will be the ones who define the next era of human-computer interaction. The question is no longer whether AI will transform UX design; it already has. The question is whether today’s designers will transform themselves to meet this moment.
